An interview with Javier Garcia from GL Research

We had the pleasure of speaking with Javier Garcia, founder and lead scientist at GL Research, about a benchmark his company ran to measure the performance of the i.MX 8M Plus on an Engicam module.

Hi Javier! Can you please explain what business your company is in and what your role is there?

My name is Javier Garcia and I'm the founder and lead scientist at GL Research, an independent private laboratory specializing in Cyber-Physical Systems: the seamless integration of computation, networking and physical processes, which holds the potential to reshape our world by enabling a new generation of more intelligent, responsive, precise, reliable and efficient machines.

GL Research participates in the international applied research effort creating state-of-the-art technologies that are used at the most complex experimental physics facilities ever built by humanity. As an example, our laboratory is a proud CERN Supplier and we have collaborated in the development of technologies that are being used at the Large Hadron Collider and other Big Science experiments around the world.

Then, in order to help our customers to innovate with a faster time to market and a lower total cost, GL Research leverages the acquired know-how by partnering with the world's leading hardware providers to develop reference software solutions in strategic areas such as Embedded Operating Systems, Artificial Intelligence at the Edge and Distributed Monitoring and Control.

What is the purpose of your benchmark?

In 2016 we started working on Industrial, Scientific and Medical applications of Deep Neural Networks (DNN). Over these years, we have witnessed how this technology has been progressively moving from cloud-based deployments powered by high-end GPUs to specialized embedded platforms that are able to make decisions at the edge with minimal latency.

From our experience in the embedded electronics market, we know that when you get marketing information from SoC vendors, you need to be cautious about the potentially biased data they provide. For this reason, we wanted to evaluate, on an equal footing, the DNN performance of the flagship platforms from different hardware providers, so that we can advise our customers on which embedded SoCs best fit their needs.

What features led you to consider NXP i.MX8M Plus for your benchmark?

At GL Research we have an extensive background working with the previous i.MX families from NXP, and we were already working with the standard i.MX 8M offering when the Plus version was announced. The i.MX portfolio has a proven track record of highly successful devices with long-term market availability, so as soon as we knew that a new device equipped with a state-of-the-art Neural Processing Unit (NPU) was going to debut, we were sure that it had the potential to become a game-changer in the embedded A.I. arena.

For this reason, as soon as we got our brand-new i.MX 8M Plus powered SoM and development kit from Engicam, the first thing we did was to check how this device compares with the rest of the field in terms of DNN performance.

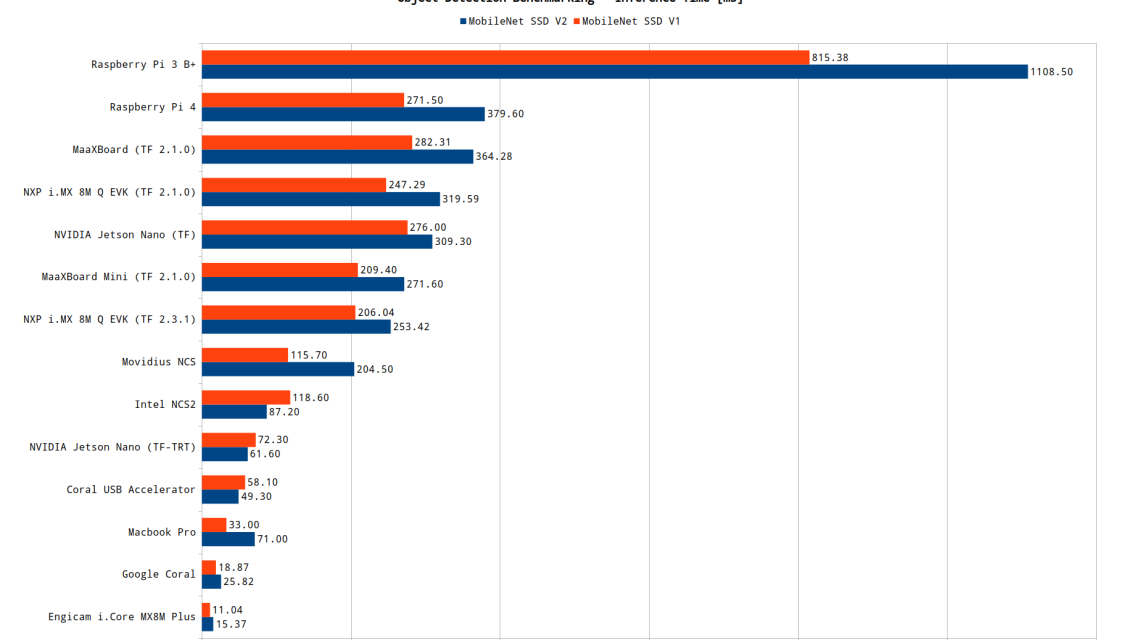

In order to do this, we took a previous benchmark conducted by Monica Houston from Avnet (available at https://www.element14.com/community/community/designcenter/single-board-computers/blog/2020/06/18/benchmarking-the-maaxboard-against-google-coral and https://www.element14.com/community/community/designcenter/single-board-computers/blog/2021/02/09/updated-maaxboard-benchmarking-results-maaxboard-mini), which checked the A.I. capabilities of the i.MX 8M Quad and Mini versions against other popular embedded platforms in the market, including DNN-optimized ones such as Google Coral, NVIDIA Jetson or Intel Movidius. Then, we incorporated the data we got for the i.MX 8M Plus by using the very same benchmarking test software... and it effortlessly jumped to first place in this ad-hoc ranking!

Could you please share your feedback about Engicam's i.MX 8M Plus based SoM?

First of all, the hardware quality of this product from Engicam is really impressive. Not only can you appreciate the convenient form factor and rock-solid ruggedness of the i.Core MX8M Plus SoM when mounting a passive heat-sink, but the EDIMM 2.0 Starter Kit in which we have it installed is so nicely crafted, and its interfaces are so well chosen and placed, that the whole setup could be used as an industrial-grade Panel PC in actual commercial products with minimal or no modifications.

Second, and just as important as the hardware quality, the up-to-date software and the extensive documentation provided by Engicam make bringing up the SoM for the first time and starting to develop your custom applications a surprisingly easy and fast experience.

In clear contrast to other vendors in the market, the people at Engicam demonstrate by doing this that they are not only focused on the product itself, but that they really care about their customers.

Which results impressed you the most?

In light of the extreme performance shown by such a low-power device as the i.MX 8M Plus, you could be tempted to think that this is the most impressive outcome of the benchmark. But, for a company with extensive experience working with other Artificial Intelligence optimized SoCs, the most astonishing fact is a little more subtle.

When working with devices from other vendors, you are often forced to use specific, proprietary toolchains with a steep learning curve in order to generate optimized models for the custom hardware architectures lying under the hood. For this benchmark, the software was just plain Python code, executed on all of the platforms under test with minor or no modification, that benchmarked two reference TensorFlow Lite quantized model binaries downloaded from the official TensorFlow site. In this way, we were able to squeeze the full DNN potential out of the i.MX 8M Plus without any additional training, just by using our previous experience with the most popular machine learning framework.
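To give a rough idea of what "plain Python code" for this kind of test can look like, here is a minimal sketch. It is not the script used by GL Research or Avnet: the function names `benchmark` and `make_tflite_invoke`, the warm-up and run counts, and the all-zero dummy input are illustrative assumptions. The TensorFlow Lite part assumes the `tflite_runtime` and `numpy` packages are installed on the target, and it leaves out any hardware-acceleration delegate setup.

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(invoke: Callable[[], None], warmup: int = 5, runs: int = 50) -> Dict[str, float]:
    """Call `invoke` repeatedly and return latency statistics in milliseconds."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    # Warm-up calls are not timed, so one-off start-up costs do not skew results.
    for _ in range(warmup):
        invoke()
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        invoke()
        samples.append((time.perf_counter() - start) * 1000.0)
    return {
        "mean_ms": statistics.mean(samples),
        "median_ms": statistics.median(samples),
        "min_ms": min(samples),
        "max_ms": max(samples),
    }


def make_tflite_invoke(model_path: str) -> Callable[[], None]:
    """Build a callable that runs one inference on a .tflite model.

    Assumption: `tflite_runtime` and `numpy` are available on the device.
    """
    import numpy as np
    from tflite_runtime.interpreter import Interpreter

    interpreter = Interpreter(model_path=model_path)
    interpreter.allocate_tensors()
    input_detail = interpreter.get_input_details()[0]
    # Hypothetical dummy input: zeros with the shape and dtype the model expects.
    dummy = np.zeros(input_detail["shape"], dtype=input_detail["dtype"])

    def invoke() -> None:
        interpreter.set_tensor(input_detail["index"], dummy)
        interpreter.invoke()

    return invoke


if __name__ == "__main__":
    # Stand-in workload so the harness runs anywhere; on a board you would pass
    # make_tflite_invoke("<path to a quantized .tflite model>") instead.
    print(benchmark(lambda: sum(range(10_000)), warmup=2, runs=10))
```

Because the timing harness only needs a callable, the same script can be carried from one platform to another and only the model-loading step has to change, which is the portability point made above.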

Thank you, Javier, for sharing this very interesting analysis with us, and good luck!